Today and tomorrow, I am attending the ZIP-SCENE Conference in Prague. The conference is part of the Art*VR Festival at the DOX Center for Contemporary Art.

When I saw the program, I was surprised that my proposal was accepted at all. Most of the presentations are about VR, XR, video games, etc. So my 2D vertical videos stand out a bit. I tried to choose a framing that placed the viewing of vertical videos in the context of the audiovisual landscape, which also includes VR. Some colleagues certainly noticed this, but they were kind enough to keep their doubts about compatibility to themselves.

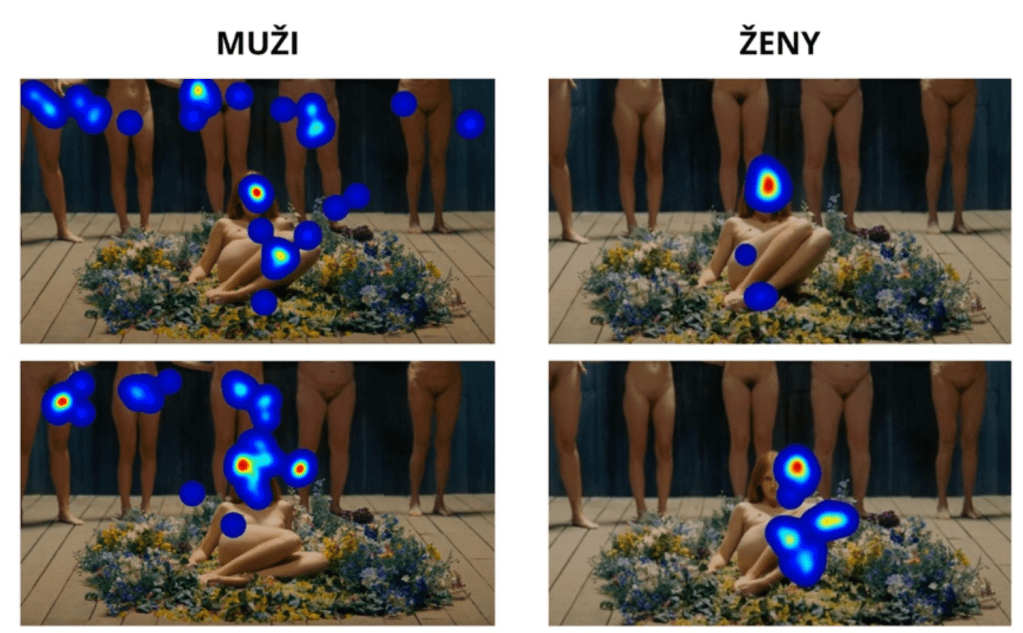

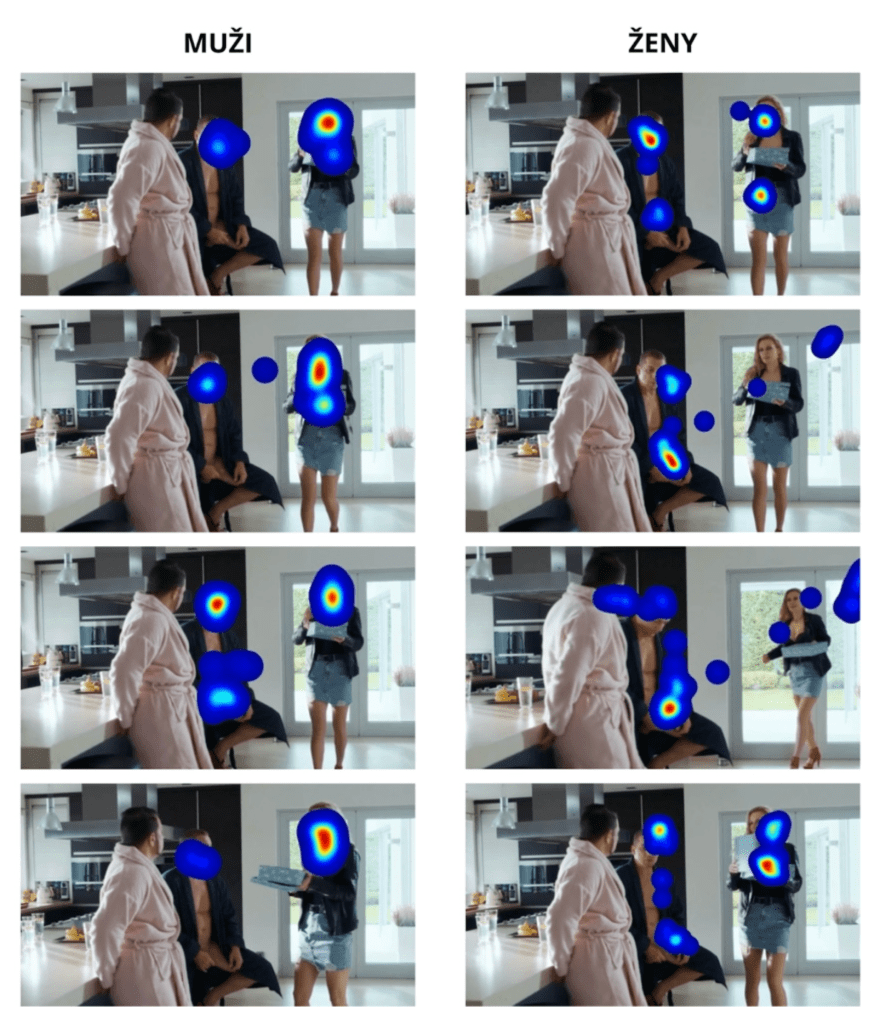

Instead, I received positive feedback, and the main finding, that vertical framing causes fragmented viewing, surprised several colleagues. (I would like to write more here, but only after we publish it in an article we are preparing with Szonya Durant and Adam Ganz.)

Moreover, the other contributions gave me the impression that I had found a like-minded audience. The ZIP-SCENE Conference is a great achievement by Ágnes Karolina Bakk’s team. This is already the seventh year, and they have succeeded in bringing together artists and researchers from various fields who think not only about what they create, but also about how it affects the audience.

I was most interested in the following presentations:

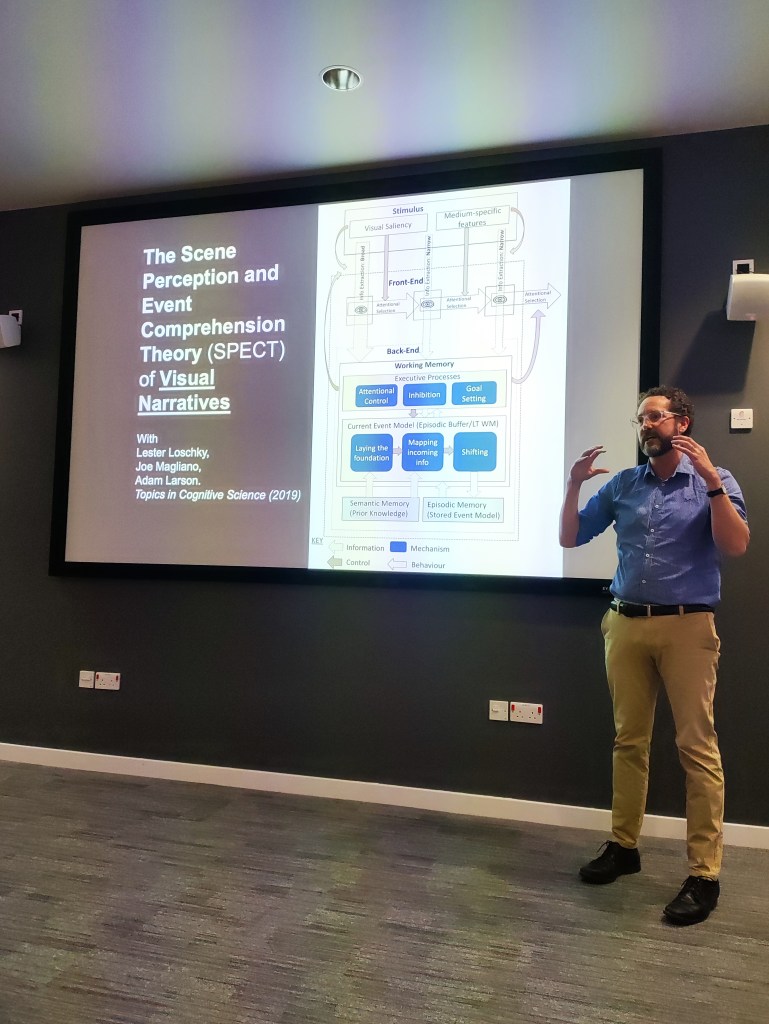

Niels Erik Raursø presented the research activities of the Augmented Performance Lab at Aalborg University in Denmark. They are investigating how to use EEG to analyze audience responses to storytelling. In theory, this could lead to greater personalization of storytelling for the needs of individual viewers in the future. I would probably need to hear more about their research, because in this 15-minute presentation I identified some methodological problems. For example, I think they could easily fall into the trap of choosing the wrong way to segment the narrative. In any case, I found Niels’ research extremely inspiring.

Another thought-provoking presentation was by Felix Carter, Iain Gilchrist, and Danae Stanton Fraser. They experimentally explored how a change in the narrative affects the audience’s attention towards exogenous cues. In my opinion, their research potentially shows how much our understanding of the same film can differ. I do not mean that we encounter different cuts of films in cinemas (although that is also possible). I am referring to the simple fact that not all of us pay full attention to films throughout their duration. We look at our mobile phones while watching a film, we go to the fridge or the toilet, we talk to people around us, and films on TV are interrupted by commercials.

The last contribution I want to mention from today’s program was presented by Pavel Srp. He talked about an experiment in which they tested whether a linear or a logarithmic function is better suited to describing how the distance of a sound source relates to its volume. The conclusion was that a logarithmic function is more suitable. Pavel’s paper is the third I have heard at the conference in a short time from “sound people,” and all three were excellent and provided great insight into audience perception.

And since I was at the Art*VR Festival, I wanted to try out what it’s like to be in virtual reality. In short, after five minutes I felt sick, and it took me about an hour to recover. But tomorrow I’ll give VR another chance.