As part of the AI bootcamp with Farshad, we tested models that enable photo and video segmentation. In this blog post, I'd like to show how Meta's SAM2 fared on two cases from the history of audiovisual culture in which the model failed. At the same time, I want to make clear that, far from criticizing SAM2, I find it a fascinating tool.

Before I introduce the examples, I want to explain what is meant by segmentation. For film studies graduates, this term can be confusing because it means something different from what we are used to. In the context of AI analysis of photos and videos, it means selecting entities within the photo or video frame. It is therefore a spatial segmentation: we can select a person, an animal or an object as the area of interest, but also houses, roads, trees or the sky. Temporal segmentation, as Farshad explained to me, is what we would call chapterization. As I said, this is unusual for a film studies graduate, but it makes sense.
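To make the two meanings concrete, here is a toy sketch in plain Python. It is only an illustration, not how SAM2 represents its data: the tiny "frame" of text labels and the helper names `spatial_mask` and `chapters` are hypothetical. Spatial segmentation marks which pixels of a single frame belong to an entity; temporal segmentation groups consecutive frames into chapters.

```python
# Toy illustration only: a "frame" is a grid of text labels instead of real pixels.

def spatial_mask(frame, entity):
    """Spatial segmentation: mark every pixel in one frame that belongs to `entity`."""
    return [[cell == entity for cell in row] for row in frame]


def chapters(frame_labels):
    """Temporal segmentation ("chapterization"): group consecutive frames
    that share a label into (first_frame, last_frame, label) spans."""
    spans = []
    start = 0
    for i in range(1, len(frame_labels) + 1):
        # Close the current span at the end of the list or when the label changes.
        if i == len(frame_labels) or frame_labels[i] != frame_labels[start]:
            spans.append((start, i - 1, frame_labels[start]))
            start = i
    return spans


frame = [
    ["sky", "sky", "sky", "sky"],
    ["tree", "bride", "bride", "road"],
    ["road", "bride", "bride", "road"],
]
mask = spatial_mask(frame, "bride")
print(sum(sum(row) for row in mask))  # number of "bride" pixels
print(chapters(["chase", "chase", "chase", "church", "church"]))
```

The first call answers "where in this frame is the bride?", the second answers "where in this film does one scene end and the next begin?", which is the distinction between the two uses of the word.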

Now let's get to the examples. There's no point in my trying to introduce Meta's SAM2 model here, because this website does that quite successfully. When I tried the demo, I naturally wondered how to fool the model. It's not nice of me, but I couldn't shake the impression that the objects in the demo's sample videos were too easy to detect.

I knew I wanted to pick out some cases from the history of audiovisual culture, but which ones? What would really put AI segmentation capabilities to the test? So I set some criteria:

– a black-and-white film

– poor-quality video

– a crowd scene

– a change of shape

I then had to choose a particular film and scene. The shortlist included crowd scenes from Soviet war movies, night scenes from horror movies, scenes with the T-1000 from Terminator 2… I also considered testing twins (The Social Network, Dead Ringers), but they turned out to be very easy for SAM2.

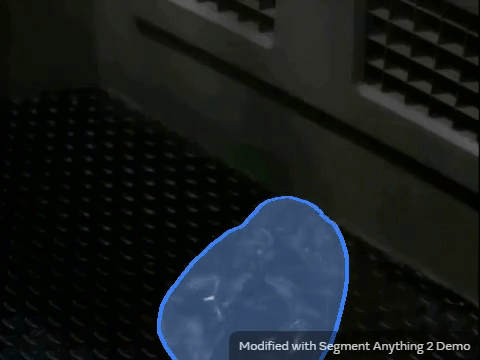

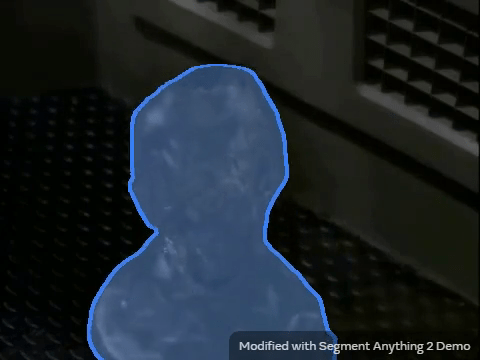

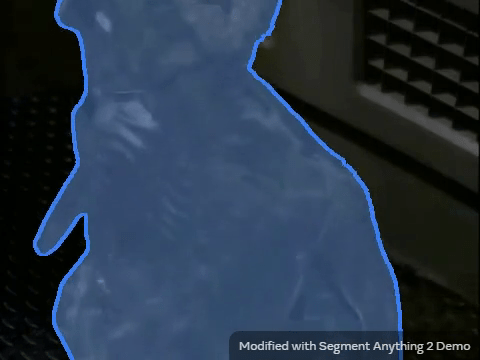

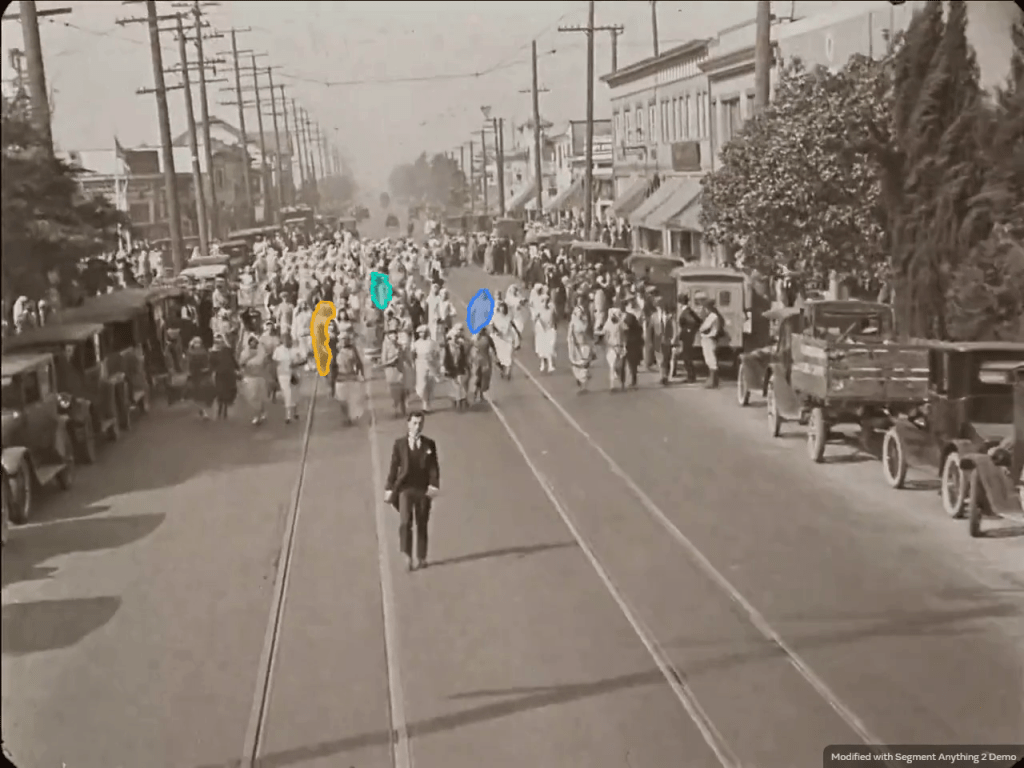

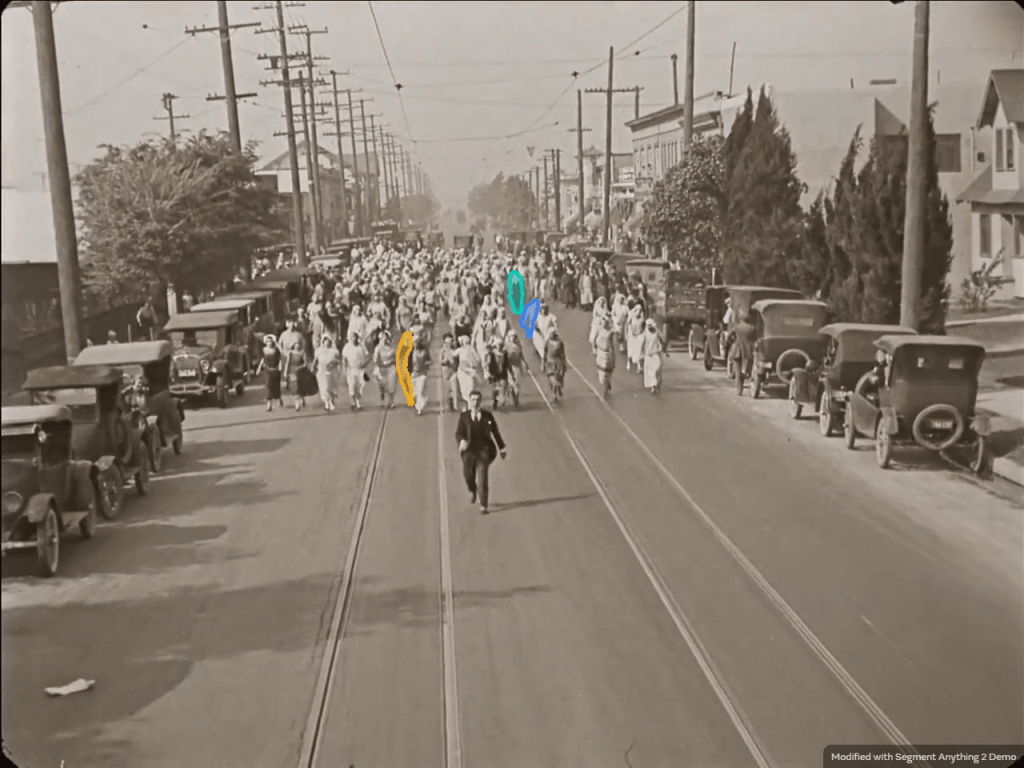

In the end, I chose the chase scene from Seven Chances, in which the main character is chased by a horde of brides. The second demo was to feature Odo, the shapeshifter from Star Trek: Deep Space Nine. I was very lucky, because both clips were available on YouTube. Let's take a look at the results.

In the chase demo, SAM2 was tasked with tracking three selected brides. As we can see, it was only two-thirds successful. While the orange and blue brides stayed tagged the entire time, the third, green tag wandered between several brides.

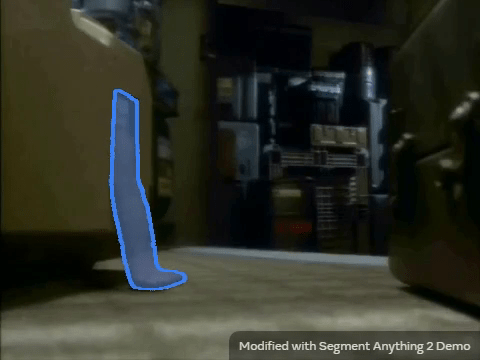

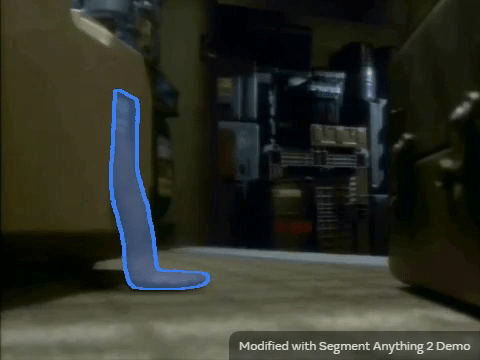

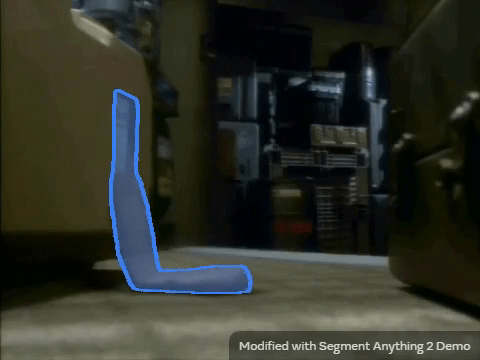

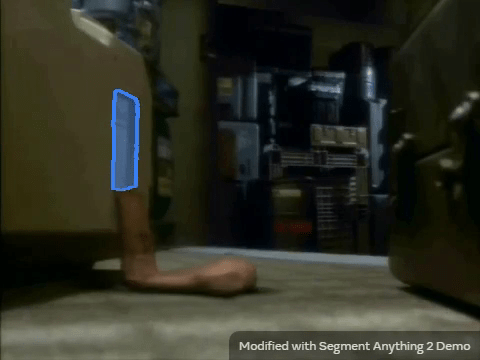
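If one wanted to spot moments like the green tag jumping between brides without watching every frame, a rough heuristic would be to compare each frame's mask with the previous one. The sketch below assumes the tracked masks have been exported as sets of pixel coordinates; the function names and the 0.3 threshold are hypothetical choices for illustration, not part of SAM2. A sudden drop in overlap (intersection over union) between consecutive frames suggests the tag may have switched to a different object.

```python
# Hypothetical heuristic, not part of SAM2: flag frames where a tracked mask
# suddenly stops overlapping with its position in the previous frame.

def iou(a, b):
    """Intersection over union of two masks given as sets of (row, col) pixels."""
    if not a and not b:
        return 1.0  # two empty masks: treat as identical
    return len(a & b) / len(a | b)


def flag_jumps(masks, threshold=0.3):
    """Return indices of frames whose mask overlaps the previous frame's
    mask by less than `threshold` (an assumed, hand-picked value)."""
    return [i for i in range(1, len(masks)) if iou(masks[i - 1], masks[i]) < threshold]


def box(r0, c0, r1, c1):
    """Toy helper: a rectangular mask covering rows r0..r1-1 and columns c0..c1-1."""
    return {(r, c) for r in range(r0, r1) for c in range(c0, c1)}


# A bride drifting one pixel to the right, then the tag jumping elsewhere.
masks = [box(0, 0, 4, 4), box(0, 1, 4, 5), box(0, 10, 4, 14)]
print(flag_jumps(masks))
```

The design choice is deliberately crude: a bride running fast or disappearing behind others would also trigger a flag, so the output is a list of frames worth checking by eye rather than a verdict on the model.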

As for Odo, SAM2 succeeded only some of the time. In this demo, we can see that the model's tendency to stick to the shape of the original object prevailed. I had the impression that SAM2 did better when Odo changed into or out of human form, but I don't have exact data on that.

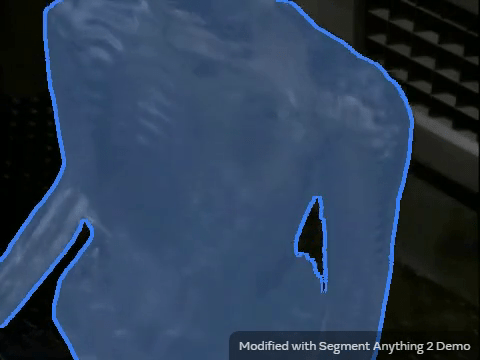

But the biggest surprise for me came in this clip. I accidentally included part of the following scene as well, and SAM2 was able to identify Odo even after the cut (in the last two images).

Now, I could go on to criticize SAM2 for not achieving a 100% success rate. I could also argue that there is no substitute for genuine analysis done by a human. I could certainly speculate about what an AI analysis can tell us when it lacks subjectivity. I'll leave all that to others.

I will confine myself here to amazement at what a computer model can do. It certainly can't replace humans yet, but I can imagine how it could enable us in film studies to analyse far more films, far more quickly, than we can now. (This was, incidentally, the first thing Peter Krämer said when I emailed him.) I can also easily imagine AI preparing the analytical groundwork, which the analyst then develops further. In fact, I see few reasons not to use AI already, and the ones I do see don't convince me.